This post is also available in:

![]() Português

Português

Many people are still reluctant to use the Oracle export Data Pump (expdp) in 10g because there is no effective method of compression via pipe as there is in simple export command (exp). Starting in version 11g, Oracle added the parameter "COMPRESS=ALL" that helps in compressing the dump file generated, but still uses the internal mechanisms for compression without allowing the user to choose the best form of compression. It also requires you to have the Advanced Compression Option.

To solve this, I developed a script that performs a parallel compression of the files generated by expdp dumps, doing efficiently as the pipe and using the parameter of maximum dump file size of the expdp, also taking advantage of the parallelism parameter.

1) How the script works?

When calling the expdp, we define a value for the parameter "FILESIZE", for example, of 1GB:

eg: expdp full=Y directory=... dumpfile=expfile.%u.dmp logfile=expfile.log filesize=1G

Thus, assuming that its total dump file is 30 GBs, the expdp will generate the first 1GB file and when finished, will start to record the second file. At this time, a parallel compression script will act in the first file generated while the second file is being recorded by the database. Compression script can use bzip2, gzip or any other algorithm of your choice and existing on your server.

The script can also take advantage of the "PARALLEL" parameter and start recording several files in parallel. As they are coming to the defined "FILESIZE", compression will be performed in the background making the export much faster:

eg: expdp full=Y directory=... dumpfile=expfile.%u.dmp logfile=expfile.log filesize=1G parallel=8

This script, support specific schema export. He performs as default a FULL database with one of the following commands (depending on the chosen parameterization):

- EXPDP: expdp full=y directory=... dumpfile=... logfile=... filesize=... parallel=...

- EXP: exp file=... log=... full=y consistent=y compress=n buffer=9999999 statistics=none direct=y

2) Requirements

- The script has been tested on Linux, HP-UX and Solaris. Actually, the basic requirement is that there are primary unix commands (cd, mktemp, mkfifo, awk, head, etc).

- Necessary that the database to be backed up is registered in the oratab file (for exp and expdp) or in TNSNAMES file (for remote exp).

- The compression tool desired must be installed (gzip, bzip2, zip, compress, ...).

3) How to install?

Download it here!

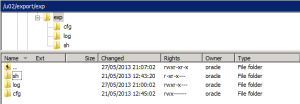

Unzip the directory "exp" in the folder you want to configure Data Pump. Subdirectories will only have shell scripts, configuration files and logs. The dumps will be generated on a configurable destination.

The folder has 3 basic subdirectories:

- SH folder - Contains the shells responsible for export and compression. It is not necessary to change them.

- CFG folder - Contains the configuration files for each instance parameterized.

- LOG folder - Contains the logs of executions.

The SH folder contains 4 shells:

- expcron.sh - This shell only centralizes the execution of everything and saves the log. This is the script that will be called.

- expfull.sh - This shell has the entire Oracle export logic process.

- expdpcompress.sh - This shell has all Oracle dumps logic compression.

- expfunctions.sh - This shell has all methods and functions that will be used by the other scripts.

The CFG folder contains the configuration file of the instance:

- orcl.cfg - The file name should be exactly the same as the instance name you are backing up in the oratab file, appending ".cfg". If this file does not exist, the process attempts to find a file named "default.cfg" in that same directory.

The LOG folder is initially empty and contains the executions logs.

Set the permissions on the directories and files according to the below:

[oracle@ec12mntrjr001 exp]$ ls -laR ./ ./: total 24 drwxr-x--- 5 oracle dba 4096 Mar 24 12:09 . drwx------ 15 oracle oinstall 4096 Mar 24 12:08 .. drwx------ 2 oracle dba 4096 Mar 24 12:08 cfg drwxr-x--- 2 oracle dba 4096 Mar 24 12:08 log dr-xr-x--- 2 oracle dba 4096 Mar 24 12:08 sh ./cfg: total 12 drwx------ 2 oracle dba 4096 Mar 24 12:08 . drwxr-x--- 5 oracle dba 4096 Mar 24 12:09 .. -rw------- 1 oracle dba 1138 Aug 23 2013 default.cfg ./log: total 8 drwxr-x--- 2 oracle dba 4096 Mar 24 12:08 . drwxr-x--- 5 oracle dba 4096 Mar 24 12:09 .. ./sh: total 36 dr-xr-x--- 2 oracle dba 4096 Mar 24 12:08 . drwxr-x--- 5 oracle dba 4096 Mar 24 12:09 .. -r-xr-x--- 1 oracle dba 2268 Aug 28 2013 expcron.sh -r-xr-x--- 1 oracle dba 4885 Nov 28 11:25 expdpcompress.sh -r-xr-x--- 1 oracle dba 8818 Aug 29 2013 expfull.sh -r-xr-x--- 1 oracle dba 3699 Nov 28 11:35 expfunctions.sh [oracle@ec12mntrjr001 exp]$

4) Configuring

The configuration file "oracle_sid.cfg" existing in the cfg folder (where oracle_sid is the SID of the instance to be backed up) requires the following parameters:

- EXPTYPE

Optional parameter that specifies the type of export and Oracle binary to be called ("exp" or "expdp"). Accepts the values "EXP" or "EXPDP". If omitted, the default value "EXP" will be used.

Eg: EXPTYPE=EXPDP

- EXPRETENTION

Mandatory parameter that determines the retention time of the old backups, in days. After the end of the process, dump files before "EXPRETENTION" days will be deleted.

Eg: EXPRETENTION=3

- EXPUSER

Mandatory parameter that defines the user and password that will connect the database to perform the export. It is recommended to create a user exclusive for this purpose, giving the user only the grants required, as follows:

create user expuser identified by asb378 default tablespace USERS temporary tablespace TEMP profile DEFAULT quota unlimited on users; grant exp_full_database to expuser; grant create session to expuser; grant create table to expuser;

Eg: EXPUSER=expuser/asb378

- EXPDEST

Mandatory parameter that defines the folder where the dump will be written. This folder must also exist within the instance if the variable "EXPTYPE" is "expdp", as follows:

create or replace directory EXPDP1 as '/u02/export/dump'; grant read,write on directory EXPDP1 to expuser;

Eg: EXPDEST=/u02/export/dump

- COMPALG

Mandatory parameter that determines the command line to run the compression tool. Currently, only supports Gzip or Bzip2.

Bzip2 is slower but the file is much smaller. If the export type is "expdp", this compression will occur in the background at the end of each part. If "exp", the compression will run through PIPE.

Eg: COMPALG="bzip2 -9"

- EXPPARALLEL

Mandatory parameter if the value of "EXPTYPE" variable is "EXPDP". Specifies the amount of jobs that will run parallel export, being passed to the parameter "PARALLEL" of "expdp" command. Do not exceed 2x the number of CPUs. If you are generating a small dump, leave as "1". If the variable "EXPTYPE" is "EXP" or null, this parameter is ignored.

Eg: EXPPARALLEL=2

- EXPFILESIZE

Mandatory parameter if the value of "EXPTYPE" variable is "EXPDP". Specifies the maximum size of each dump file being passed to the parameter "FILESIZE" of "expdp" command. Do not leave too small because the maximum limit are 99 files. The size must be specified in the format of GBs. If the variable "EXPTYPE" is "EXP" or null, this parameter is ignored.

Eg: EXPFILESIZE=3G

- TARCOMPACT

Mandatory parameter if the value of "EXPTYPE" variable is "EXPDP". Specifies whether in the end of the process, all the export files compressed will be joined into a single TAR (1= Yes, 0=No). If the variable "EXPTYPE" is "EXP" or null, this parameter is ignored.

Eg: TARCOMPACT=0

- RESOLVTNS

Optional parameter and only applies if the value of "EXPTYPE" variable is "EXP" or null. Specifies the database connection string inside TNSNAMES file, if you wish to run backup of a remote server. Only works for "exp", not "expdp". If the variable "EXPTYPE" is "EXPDP" and this parameter is specified, the process will show an alert and stops.

Eg: RESOLVTNS=orcl.world

- EXPPARAM

Optional parameter that alters the default call of "exp" and "expdp" commands. If omitted, the default value will be:

- EXPDP:EXPPARAM="full=Y flashback_time=systimestamp"

- EXP: EXPPARAM="full=y consistent=y compress=n statistics=none direct=y buffer=9999999"

Eg: EXPPARAM="full=y consistent=y compress=n statistics=none direct=y buffer=9999999 rows=n"

- EXPDEBUG

Optional parameter that will generate all the execution trace inside the log file. If omitted, the default value will be 0 (OFF).

Eg: EXPDEBUG=1

Scenarios samples:

a) Example configuration to export data pump (expdp) with no executions in parallel, maximum size for each dump is 3 GBs.

EXPTYPE=EXPDP EXPRETENTION=7 EXPUSER=expuser/asb378 EXPDEST=/u02/export/dump COMPALG="bzip2 -9" EXPPARALLEL=1 EXPFILESIZE=3G TARCOMPACT=0

b) Example configuration to export data pump (expdp) with 8 executions in parallel, maximum size for each dump is 2 GBs.

EXPTYPE=EXPDP EXPRETENTION=7 EXPUSER=expuser/asb378 EXPDEST=/u02/export/dump COMPALG="bzip2 -9" EXPPARALLEL=8 EXPFILESIZE=2G TARCOMPACT=0

c) Example configuration for simple export (exp).

EXPTYPE=EXP EXPRETENTION=7 EXPUSER=expuser/asb378 EXPDEST=/u02/export/dump COMPALG="bzip2 -9"

d) Example configuration for simple structure export (exp) of SCOTT schema.

EXPTYPE=EXP EXPRETENTION=7 EXPUSER=expuser/asb378 EXPDEST=/u02/export/dump COMPALG="bzip2 -9" EXPPARAM="owner=scott consistent=y compress=n statistics=none direct=y buffer=9999999 rows=n"

e) Example configuration for simple remote export (exp) through TNSNAMES.

EXPTYPE=EXP EXPRETENTION=7 EXPUSER=expuser/asb378 EXPDEST=/u02/export/dump COMPALG="bzip2 -9" RESOLVTNS=orcl.world

5) Running

To execute the export script, we run the shell expcron.sh passing as a parameter the instance sid exactly as written in the oratab file.

Thus, the script will fetch the configuration folder for the file "oracle_sid.cfg" or the file "default.cfg", if the first one does not exist.

Example of punctual execution:

[oracle@orasrv ~]$ cat /etc/oratab # orcldb:/u01/app/oracle/product/11.2.3/db_1:Y [oracle@orasrv ~]$ $EXPORT_HOME/sh/expcron.sh orcldb & [1] 17637$

The execution log is written in the log folder "$EXPORT_HOME/log".

Example of backup scheduled in crontab (most common), running every day at 21h:

[oracle@orasrv ~]$ crontab -l 00 21 * * * /u02/export/exp/sh/expcron.sh orcldb

NOTE 1: The script does not run on servers that have two identical ORACLE_SID in different ORACLE_HOME's. The script also does not accept to run as root, for security reasons.

NOTE 2: If the export method is simple remote export (exp) via TNSNAMES, obviously there is no requirement for the presence of the SID in oratab file. The single parameter of the "expcron.sh" command will only be used to locate the configuration file "oracle_sid.cfg" and it will read the string name inside TNSNAMES files.

6) Script output

In addition to the log file generated in the directory "$EXPORT_HOME/log", the output of the script is obviously a compressed DUMP file (if "exp") or multiple compressed DUMPS files inserted into a folder created by the process (if "expdp"). If the variable "TARCOMPACT" has been set to "1", the entire directory will be placed in a container ".tar" file.

The log of the export output will also be generated along with the dump file.

7) Conclusion

Now we can run the expdp with in a efficient way, compressing files. If the compression is too slow, try changing the second parameter of the "gzip" tool (or "bzip2") to "-- fast" (or "-1"). If the files are too big, do the opposite, switch to "-- best" (or "-9").

Try it and leave below any questions or suggestions.

Download it here!

40 comments

Skip to comment form

Hi,

Very good article about expdp and compress...We have AIX system...When do you plan to support AIX?

Thanks and regards...

Author

Hi David, I'm glad you enjoyed.

I don't have any AIX system running DBs to adapt the code, but as all of them are UNIX based, I believe not many changes are necessary!

Regards,

Rodrigo

Your scripts have saved me a lot of work, as I was going to implement something much like this. I have done some enhancements by making the gzip/bzip process able to write it's archives to a different location (on an NFS file system in my use case).

Would you like me to send the changes back to you? If not, can I publish my version on my blog and link back to yours?

Author

I am very glad that the script have helped you. Please send me your version and you can also post it and link me back!

Regards,

Rodrigo

Gents, obvious hint! In order to avoid "ping-pong" of emails with modified scripts - why not to just upload them all to GitHub! (instead of current form - ZIP file, downloadable directly from current blog). That way all manual work - discussion of changes here in comments, then mail(s) with changed scripts, then manual merge of changes into master branch, then publishing for everybody updated version etc. - all that can be simply made on GitHub in a form of Pull Requests. No need to describe IMHO too much, as everybody already knows how all that works on GitHub. Sorry for mentioning so obvious ideas - just I was a bit surprised when found that instead of GitHub, for scripts distribution used ZIP with direct download from current Blog. Even current post is from 2015 year, but now outside just started 2022 year.

Thanks for sharing script, I am trying your script to compress 10TB DB in 10g and import to 12c, will impdp of 12c can load compress file *.dmp files or do I need to uncompress and make them available? your answer will help in setting direction for our upgrade. Thanks again

Author

Hi Bhushan,

You'll have to uncompress them in *.dmp files before using the 12c impdp. If you want to avoid the creation of "dmp" files to save space, I would recommend using datapump impdp through db link.

Regards,

Rodrigo

hello,

I have a little trouble lately with the script.

I got the following error while far everything was ok:

>>> Oracle log:

....

Traitement du type d'objet SCHEMA_EXPORT/TABLE/STATISTICS/TABLE_STATISTICS

ORA-31693: Echec du chargement/déchargement de l'objet de données de table "NXTP1"."ECC_AUD_AUDIENCE" ; l'objet est ignoré en raison de l'erreur :

ORA-31617: impossible d'ouvrir le fichier de vidage "/u02/app/oracle/dump/EXPDP_NXTDB_FULL_20141210_222928.01.dmp" pour écriture

ORA-19505: échec d'identification du fichier "/u02/app/oracle/dump/EXPDP_NXTDB_FULL_20141210_222928.01.dmp"

ORA-27037: impossible d'obtenir l'état du fichier

Linux-x86_64 Error: 2: No such file or directory

Additional information: 3

. . export : "NXTP1"."ECC_DEM_DEMAND" 1.543 GB 14928555 lignes

......

>>> Script log:

...

2014-12-10 22:29:28 - Checking O.S...

2014-12-10 22:29:28 - O.S: Linux

2014-12-10 22:29:28 - Loading parameters "NXTDB.cfg"

2014-12-10 22:29:28 - Calling ORAENV...

The Oracle base remains unchanged with value /u01/app/oracle

2014-12-10 22:29:28 - Checking file extension...

2014-12-10 22:29:28 - Extension: bzip2...

2014-12-10 22:29:30 - Starting EXPDP of instance NXTDB...

2014-12-10 22:29:30 - Loading custom expdp parameters: "SCHEMAS=nxtp1"

2014-12-10 22:29:30 - Calling parallel script of compression...

2014-12-10 22:29:30 - Beginning of the process to compact EXPDP...

2014-12-10 22:36:03 - Compacting EXPDP_NXTDB_FULL_20141210_222928.01.dmp

2014-12-10 22:51:35 - Compacting EXPDP_NXTDB_FULL_20141210_222928.02.dmp

2014-12-10 23:11:51 - Compacting EXPDP_NXTDB_FULL_20141210_222928.03.dmp

2014-12-10 23:29:18 - Compacting EXPDP_NXTDB_FULL_20141210_222928.04.dmp

2014-12-10 23:37:25 - Export command returned: 5

2014-12-10 23:37:25 - Cleaning files older than 1 days...

2014-12-10 23:37:25 - Waiting the end of compress script...

....

I feel that the first file is already compressed and removed while Oracle has always needed.

I do not have a full backup but only a schema.

I have had this error by implementing the pbzip2 compression tool in your script, then I'm fine with bzip2. bzip2 has worked well so far.

Have you any idea?

Author

Somehow the expdp utility tried to reopen 1st Dump file even after it has reached the maximum size and there was no process locking it. It is like coming back to do something in an already generated file.

I have no workaround for this, as the script can not predict if oracle is going to do that.

I think I've already faced it before and what I did to solve was changing the size to a smaller value and increasing the number of parallel threads.

Regards,

Rodrigo

Thank you very much for your comments.

I can not use the parallel setting because I do not have an Oracle enterprise version.

The error is truly random and I did not try to restore the backup. Perhaps it is correct.

Thank you again for your script.

Regards,

Pascal

Same problem for me.

expdp needs to write in file 01 at the end of process.

File 01 should not be processed while expdp is running.

Dear @Rodrigo, once I saw current post - even before reaching end of post, I have asked myself, something like "interesting, how was resolved issue with _post-update_ of already generated Dump files, until whole Export process not completed". And when I found discussion of that issue here in Comnents, it becomes obvious that issue really exists and you and your users hit exactly it.

Pre-history: some time ago I have worked on some migration project via Data Pump Export and Import - within it I did manually exactly what you have realized and automated with your scripts - I have worked on large DP Export, and there was lack of free space on destination folder, where pointed Directory object, used by Data Pump. So, during many test runs I have used following method - I have manually compressed generated DP Dumps, once they was completed, and deleted them immediately after compression completed, in order to freed up disk space. I have used FILESIZE parameter, let's say 1G value. All generated Dump files was exactly of the same size - like 1 073 741 824 bytes (which is 1 GB). And during tests I found interesting behavior (like already mentioned in comment above) - even generated and completed Dump file(s) did not change its size, at some moment changed timestamp! I do not remember - was it only about first Dump file, or some Dumps "in between" was updated - but general rule, seems, can be formulated as following: during its work, Data Pump Export creates Dump files with exactly requested file size, as specified by FILESIZE parameter, very accurately. And that size did not change afterwards, once file created and size is as requested. But, during its work, seems, at some moment, Data Pump "goes back" to already created Dump file(s) - and update inside them some data, some fields (?), probably pointer(s) to other Dumps, to create "chain" or "sequence" of Dump files, so that each file points to the next one (like "linked list" or so). Or maybe first file contains kind of "contents" (links or pointers?) - Metadata info about all other Dump files in the set.

Few details additionally to that rule - I found that (and comments here partially confirm that):

* once each Dump file created, if you compress it immediately - then whole Dump files set will be corrupted, and DP Import will fail

** there could be few exceptions and probably only Import of the whole Dumps set (schemas, segments etc.) would fail - but separate Import of particular objects potentially can succeed; but for sure, that is not "production" solution and should NOT be recognized for use, only being lucky you can extract particular objects

* if you compress AND immediately delete each Dump file before whole Export process completes - then whole DP Export process can fail, once Data Pump Export process will try again to access some of the completed Dumps (only first Dump file?) - like already mentioned and showed in Comments

All that is "dark zone", all I have written here about my findings. Normally only MOS can answer such questions, if at some moment there would be posted appropriate Note with explanation, why Data Pump Export process goes back to already generated Dump files and updates them. And what exactly its updating there. Sure, someone of course can find answers, at least can try - open Dump file and try to "hack" it in HEX editor, trying to find structures inside it, logic, format, fields and so on. But I have another great idea!! Taking into account latest changes in your @Rodrigo professional status (https://www.dbarj.com.br/en/2021/06/heading-to-the-mothership/) - I think, later or sooner, after onboarding will be "completely completed" and you will have some free time (and wish!) for finding answers - probably, being "inside Oracle", you will be able to reach internal Development Team, responsible for Data Pump - and get out of them realized logic, why Data Pump Export "touch" exported Dumps and what its changing inside them. Or maybe you will have access to source code, and will find answers on your own. However, as far as I know, newcomers to Oracle got access to source code after 1 year of work in Corporation - but that was info from 20 years ago, maybe everything related to that topic changed ten times already since then.

Author

Hi Rosti, I will check internally and get back here soon =]

By the way, those scripts were created 8 to 9 years back.. and there was no update since then. So there is a lot of improvements recommended.

Hi Rodrigo,

Any update about this subject? I really interested in how can we compress these files while expdp is executing, thanks a lot...

Author

Hi Carlos, sorry for late reply. It's been a while since my last access. We are planning to implement a new feature for that, however, meanwhile, the best way to avoid getting error is NOT COMPRESSING dump files where the MASTER TABLE is stored (as this dump is updated during the full dp process). You can do it writing some code that will check DBMS_DATAPUMP.KU$_DFHDR_MASTER_PRESENT: https://docs.oracle.com/database/121/SUTIL/GUID-5AAC848B-5A2B-4FD1-97ED-D3A048263118.htm#SUTIL977

To use Data Pump Compression you need to purchace the Advanced Compression Option

for Enterprise Edition. You can not just use it without licencing this option.

Author

Hi Paul.

Thanks for reminding! But this article deals with another compression method.

Regards

Hi DBA RJ,

This looks quite interesting. My question is though how is the import being handled then?

My impression is that you'd need to provide the disc space you would have needed without compression anyways, when doing an import!

Kind regards

David

Author

Hi David,

Yes you are absolutely right. For restoration, we'd need a disk space that could handle all the files uncompressed.

Regards,

Rodrigo

can you please send me the usage of the script and i dont have log files or dumpfiles in the database

i am doing an experiment on sun solaris 10g

Author

Hi Sidd,

In this post I give some examples. Take a look and see if it applies to your case.

Regards,

DBA RJ

Nice script!!!

I really love the idea to compress exdp "chunks".

I'm on HP-UX and I had to add this line:

DPDIR=$(echo $DPDIR_PRE|tr -d '\r')

because in DPDIR a newline was added give an error in the expdp command later.

Still great script.

Author

Thanks!

Hi, thank you for sharing this nice post and scripts, grateful for that.

Failed in AIX, then I managed to test it successfully in Linux.

Foued

Very good tool RJ.

Author

Thanks Rajkishore!

I have a problem with your scripts on linux. In log i see only 2 lines:

- Beginning of theexport process....

- One argument is needed.

Can you help me?

Author

Hello Rafal,

Check instructions above. You are not passing the required parameter to the script.

Thanks,

Rodrigo

Hi,

Wich parameters You mean?

BR,

Rafal

Author

Hi Rafal,

When you call expcron.sh or expfull.sh, you need to pass a command line parameter.

Thanks,

RJ

Is this script working on Oracle Standard Edition?

Author

Hi Mikser, "Parallel Data Pump Export/Import" is not available in SE, but you can still use the script to get the OS encryption of your backups.

Boa tarde,

Ele pode ser utilizado na standard para export e import?

Author

Pode! No entanto, o paralelismo que é feature de EE não irá funcionar e vai precisar trabalhar com apenas 1 thread.

Abcs

Hi, thank you for sharing, I need to modify the script to export with expdp not full if not tables in a schema, any ideas?

Hi

Thanks for the script

It's very helpful

Just verifying ... you do not delete the original dump file immediately after zipping ? only at the end ?

That the main reason for me to use zip during expdp - to avoid from occupying large disk space during the expdp ...